The past few years have been rough on me, in terms of “What technologies I care to and am willing to use.” There have been more than a few upsets on the (admittedly somewhat non-standard) path I was on, and I’ve been trying to think through more of “What fundamentals should I really work from?” - and whether these are even remotely compatible with actually using modern consumer tech. I won’t claim this is carved in stone, fully formed, because things have a way of changing, but this is my attempt to write out some of it, requiring me to make things somewhat more concrete in the process.

I want to think through how I use technology in the modern world, how I should use technology in the modern world, and what paths are of value versus the standard default - and even against my historic defaults. Are we deciding our own paths, or are we just letting advertising and tech companies drive us down the profitable roads for them?

The Default Tech Path

If you don’t really think about technology that much, you’re likely to be on one of the “default tech paths” - the nature of it will depend on your income and desire to have the latest and greatest, perhaps, but the defaults anymore tend to be very profitable to the tech companies providing the goodies in exchange for your behavioral data, and tend to be fairly expensive. As you can afford upgrades, or every few years, buy the latest and greatest, and get rid of (trade in, pass down, or sell) your old clunker that just isn’t as fast anymore. Come on, it’s a few years old and, of course, a few OS versions behind.

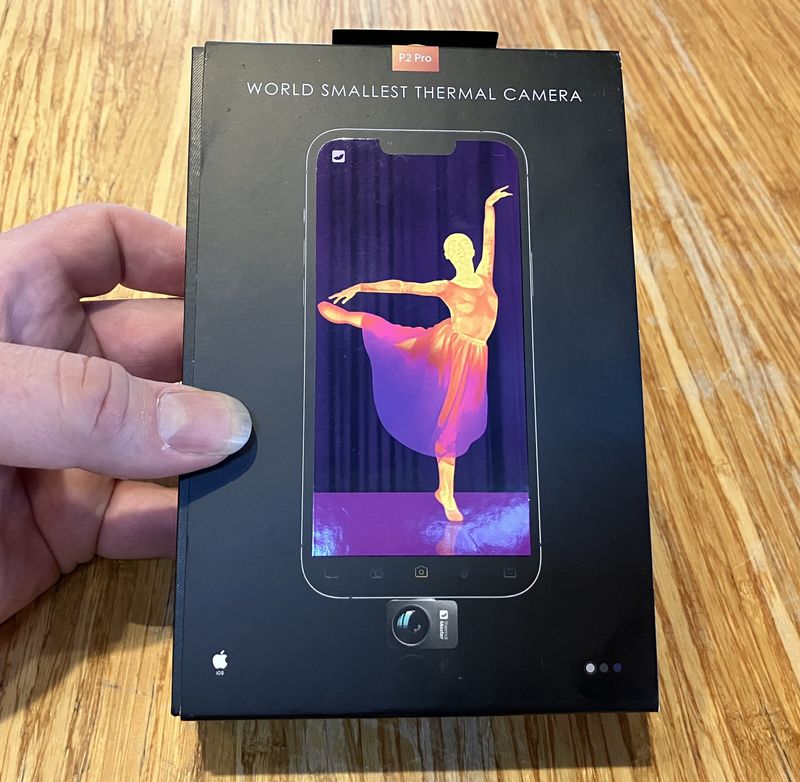

This path will typically be either Apple-centric, or Microsoft-centric (though the Apple-centric approach is usually far better integrated and works better, as long as you’re willing to tolerate them). It involves a phone, laptop, tablet, sometimes a desktop, perhaps a watch, and often more than a few various “smart” gizmos scattered around the home and car. They never quite work as hoped for, but when they come close it feels quite magical, and being able to talk to your phone and have your oven turn on does sound pretty darn cool.

Nothing beyond “Can I afford this?” and “Does it do more or less what it claims on the tin?” gets considered, and you serve as a useful conduit of both money and attention into whichever of the tech companies can manage to sink their claws into you (“engage you”) the most. The nature of the phones might differ, depending on income and desire to spend money on the devices, but this is pretty much the normal path.

My Tech Path: Yup, the Default!

My path through technology has, for the bulk of my adult life, been some variant on that default path. Sure, I’ve run weird OSes on occasion (IRIX being the Unix I learned on), I’m a bit more prone to repair a laptop than the average person (and do the weird repairs that even a lot of tech shops think impossible or absurd), but since about 2003, I’ve been in the “Apple as primary, and everything else lying around” camp.

Sure, I run Linux on the servers and some of the dev desktops, I’ve almost always had a Windows box or three around the house for whatever reasons, but the core of my ecosystem was Apple, and while I was a little bit late to the iPhone camp (3GS was my first one), I was a launch day line-stander for the iPad, I’ve had a range of iPads, I bought an M1 Mac Mini when it was announced, and I’m not really going to do the math on how much I’ve spent on unlimited cell plans over the years because I really, seriously, don’t want to know. It’s not a small number.

Some of my recent posts on things like “KaiOS flip phones” may give a hint that this has changed, though. And it certainly has. In particular, Apple and Microsoft have both succeeded in pissing me off greatly in the past year or so, to the point that I want almost nothing to do with either one of them. Intel’s been pissing me off for about 4 years now, and in some ways it feels like I’m running out of tech corners to lurk in…

Apple: What Rotted?

I am, to borrow the phrasing Apple chose to highlight in one of their internal emails, a “screeching voice of the minority.” I’ve been fine with Apple for a long while, and have owned an awful lot of their hardware over the years. My main concern was regarding their absurd butterfly keyboards, which seemed a failure waiting for an expensive and inconvenient time to happen, despite literally everyone else in the computer industry having solved laptop keyboards a decade or more ago.

Jony Ive was gone, that era of “industrial awards over function” departing (which seems to be the case - the M1 portable hardware is a welcome return to older styles of function over form), and… then Apple seems to have decided to take all their privacy capital, all their “We told the FBI to go pound sand” security goodwill, and detonate it in a glorious fireball. I’ve written before about their CSAM scanning, I’m aware they’ve “delayed” implementing the worst of it, but I simply want no part of that. And no part of a company that’s willing to consider that route in the first place. My devices are my devices, and I object (in the strongest possible terms) to them being turned against me with a “Prove your innocence” approach to content. Sorry, not OK with me.

It’s not just that, though. Sure, that stuff was the final straw, but I’d already been considering my willingness to use Apple in the future due to their behaviors in China regarding iCloud - which, roughly paraphrased, are, “Oh, yes, Sir, CCP, whatever you say, Sir! I agree, it would be a shame if something were to happen to our factories in your country, so we’ll totally put iCloud servers in your country and let you have full physical access to them through your subcontractors!” Designed in California, assembled in China, and they’ve literally no alternatives to China for assembly anymore. So, of course they bow.

It was sad to get rid of it, but my M1 Mac Mini and LG 5k monitor, which was going to be my long-term Mac office desktop, are gone, to someone from eBay. I may not be able to directly influence Apple’s behavior, but I can at least align my pocketbook and my conscience.

Microsoft: M$ Winblows!

Microsoft, meanwhile, has gone from “Windows 10 will be the last version of Windows” to “Oh, wait, no, really, we need to do this Windows 11 thing.” And, again, I want no part of what they’re doing, because of what I can read between the lines. In no particular order:

- Win11 obsoletes a lot of perfectly functional hardware for no good reason beyond “The PC industry is complaining about low sales,” as far as I can tell. Original Core i7-970s and the like still run Windows 10 perfectly well, and the last decade of hardware has no problems running Win10 and various useful bits of software. So, of course, they’ve come up with some new hardware requirements that are needed for security, except when they’re not, and, well, sure, you can install it on unsupported hardware anyway, but we might not keep giving you security updates! Except we are, for now… The messaging about it is mostly nonsensical, flips around like a weathervane in a Kansas twister, and seems to be mostly about ensuring that most users will buy a new computer to run it. Great. More e-waste, which I’ll touch on later.

- Win11 Home (and soon Pro) now requires an online Microsoft account to use the OS. The “local account only” option during setup no longer exists without some extensive hacking. Of course, the “I do not want a Microsoft account!” option on Win10 has been getting harder and harder to reach, with the current builds requiring you to disconnect your network cable to get the option - and then it’ll badger you until you find the right checkbox to disable it, because they really, really want the email address of the current user. I can only assume that some sort of behavioral extraction of what you run on your desktop, tied to your email address, is quite valuable on the backend to them, and I don’t care to have my local OS use and behavior quite that “monetized” by someone else. It’s simply none of their business.

- They had a “bug” in the beta in which Explorer (the Start menu, file navigation, and just about everything else of use depends on Explorer) crashed and wouldn’t operate. Why? Because there was an error in a blob of advertising JSON that Microsoft had published for some reason or another. If ads are so tightly integrated into your OS that a bad ad blob breaks the entire OS, again, I’m not OK with that. With that level of integration, you’re no longer an operating system; you’re a behavioral extraction and advertising delivery system that also happens to run some programs. Of course, the ads in Explorer when browsing files were “an experimental banner that was not intended to be published externally” - so, again, it sure looks like the “problem” with Windows 10 was that it just couldn’t serve enough ads for their quarterly growth targets.

So Microsoft is also out of the running. I don’t intend to run Windows 11 - and it seems I’m in decent company in certain tech circles. The endless whining about Windows 11 among technically inclined users doesn’t seem to be tapering off, and I expect it to get far worse around the time that the Windows 10 security updates stop coming.

What the Heck, Intel?

Intel is also on my “I’d rather not use them going forward” list, for the very simple reason that nobody at Intel seems to know what’s going on with their chips anymore. The past few years of microarchitectural vulnerabilities are a big part of that, but so is their inability to get some of their flagship features working reliably - TSX (transactional memory) and SGX (secure enclaves) are flagship features that simply don’t seem to work. Intel ships TSX, finds bugs, and then disables it later, and their 11th and 12th gen processors don’t even support SGX anymore. Good luck playing Ultra HD Blu-ray content (which relied on SGX on the PC)… unless you prefer “the usual sources.”

Here are a few of the most egregious examples of “You really don’t know what you’re doing, do you?”:

- Meltdown and Spectre were bad. Meltdown, in my opinion, was a good bit worse because it involves simply ignoring permission faults and speculating through them. It’s the sort of thing you’d do if you cared about performance over literally everything else, security included.

- L1 Terminal Fault (also known as Foreshadow) involves, yet again, “playing through” permission boundaries in the L1 cache and violating literally every security boundary there is. Process to process, process to kernel, guest to hypervisor, guest to guest… as long as the data you wanted was in L1, you could speculate your way to the values. This stands out because it directly violates Intel’s claims about SGX enclaves, which strongly asserted that the values being computed in an SGX enclave are secure from observation, even with a fully malicious Ring 0 environment running the enclaves. As it turns out, by utilizing L1TF, not only can you read out the entire memory contents of a production SGX enclave, you can (using other techniques) single-step it and get the register values out at every single step (as they’re stored in encrypted enclave memory on enclave exit). Whoops.

- Plundervolt (probably my favorite name in this suite of trainwrecks) violated the other assertion Intel made strong claims about - that malicious Ring 0 code couldn’t influence the operation of an SGX enclave to cause it to fault. Again, that claim was false when it was made. Some undocumented (that never ends well…) MSRs (model-specific registers, used for system configuration) allowed lowering the processor voltage to improve efficiency. If malicious code outside the enclave lowered the voltage to just the edge where complex instructions like multiplication and AES encryption operations started to fault, it could then enter an SGX enclave, which wouldn’t check the operating voltage and would perform calculations with those faulting multiply and AES operations. That, in turn, opens a huge can of worms in which faulty operations can be used to leak key material. In production SGX enclaves. Whoops.

- Some of the speculative vulnerabilities were quite slow to exploit - except, oh hey, this nifty transactional memory thing speeds them up greatly, because you no longer have to bother with the OS kernel trip through the signal handler for page faults. Handy-dandy!

And so it goes. What’s apparent to me is that nobody inside Intel anymore is capable of reasoning about their chips, as a whole. There are silos of excellence, perhaps, but when your L1 cache behavior starts breaking your security isolations, or when your power efficiency tweaks allow violating secure enclave behavior, there’s a very real problem - and I’m far from convinced it’s been fixed internally.

What’s Left?

Given all this, what spaces in consumer tech are actually left to play in? Not many, it seems.

For processors, the options (at the moment, yes, I know RISC-V is coming but it’s not here yet in a high enough performance form) are AMD (staying in the x86 world), and various ARM cores. We finally have some high performance, non-Apple ARM cores, though finding them at an affordable cost is harder than one might hope, mostly due to licensing fees.

On the operating system side, I’m pretty much left with the FOSS OSes - Linux and the BSDs, with Linux having rather more general support for the sorts of things I do. On the other hand, the paranoia of OpenBSD seems to have held up alarmingly well through the past few years - and, conveniently, there’s an OS that blends the paranoia of OpenBSD with the general usability of Linux. Yes, I know this sounds like the punchline to a bad joke… but Qubes is a thing, and I’ll be going into depth on it this year.

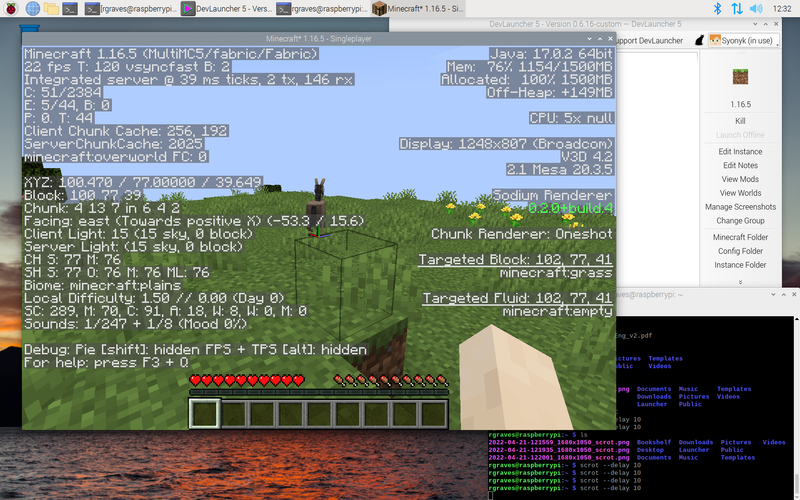

So, that’s about where I’ve ended up for the time being - some AMD systems for the high power x86 stuff, and an increasing pile of little ARM computers for my desktops. Unfortunately, my colo’d server is Intel - I wasn’t able to make the AMD hardware reliable during my burn-in testing and rather than fight it endlessly, I went for something that I could actually get racked up and online - and I’ve been making pretty good use of it for communications, hosting, games, storage, etc. My house desktop is also Intel, though that may be changing soon enough.

However, I’m still not done with this post. There are still some other things related to technology I’ve been considering, and the rest of these are quite a bit more open, in that I simply don’t have great answers for the best way to accomplish them (or if they can even be accomplished).

Minimum Surveillance

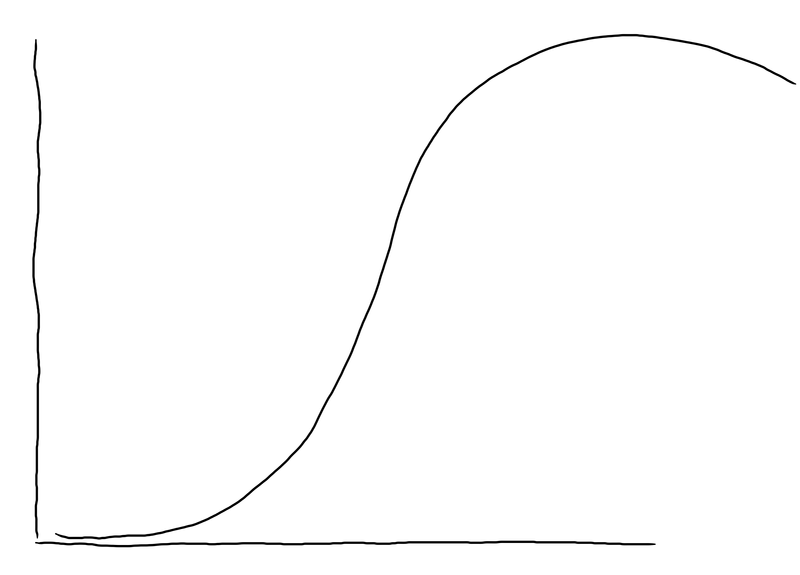

At this point, I expect at least some of my readers are familiar with the concept of surveillance capitalism, as developed at length and with no shortage of invented words in Shoshana Zuboff’s book, The Age of Surveillance Capitalism. If you’ve not read it, even though it’s a somewhat vile slog at times, it’s well worth the trouble to get through. It maps a way of thinking about our modern tech overlords and ecosystems in a far better defined and useful way than “If it’s free, you’re the product” - because, in her argument, you aren’t really even the product. You’re the mine of raw materials, and the target of the advertising or behavioral modification, but you’re neither the customer nor the product in our modern systems.

It’s a quite depressing read in many ways, but there are plenty of concrete actions one can take to try and reduce how much one is providing, directly or indirectly. My blog no longer requires JavaScript, no longer uses Google Analytics (I use CloudFlare Analytics, under the logic that as I’m using them to front the pages in the first place, they could already gather whatever data they wanted, and it’s cookieless and minimal, and if you block it, good for you - I fully support this!), and is self-hosted, now that I’ve gotten clear of Blogger. I’m also trying, in various ways, to have spaces for discussion that are less tracked (see my forum), and I’ve been experimenting quite a bit in the past few years with the Matrix communication protocol as a way of having reliable, modern communications hosted entirely outside the grubby paws of the large tech companies. The bulk of my communications is now between Matrix clients and my server, not even having to go out into the larger federated space - and certainly not having to go through Google, Facebook, or any of the other large companies. I’ll probably talk about this more in the future too.

So, I think a reasonable path one can take is to try and aggressively minimize the amount of behavioral surplus one spins off in collectible forms. Part of this can be accomplished with browser extensions, but a lot of it simply involves “not doing those things that generate a huge amount of surplus.” No social media apps on your phone (you don’t want to know what all they collect just in case), and, ideally, none of that in your life at all (yes, I do mean the smartphone, too!). If it’s going to be there for some reason or another, silo it aggressively into isolated browsers, and perhaps try to avoid sharing IPs with too much other activity in whatever ways you can. Qubes offers some helpful ways to do this.

Yes, this means there are things you can’t do with consumer technology - or shouldn’t do. And more and more, I’m OK with that.

Minimal Financial Cost

Another aspect one might consider is how to minimize the financial cost of the sort of tech one uses. One good way to not do this is to always buy new hardware, top of the line, and upgrade every year or two. That means you’re always eating the depreciation of new hardware, which tends to be exceedingly expensive (although if you need top of the line hardware, this isn’t the worst option out there - years ago, it was known on Ars as the “Jade Plan” and it worked fairly well). Buying some used, few-year-old hardware every now and then, and running it until it no longer functions, is a lot cheaper - and involves a lot less temptation to do the sort of high end things that are money pits anyway. If you can’t even run basic VR programs, you’ve got a lot less incentive to sink a ton of money into headsets, walkers, and the assorted other trappings of that particular hobby.

You can also, almost always, get away with a lower-tier internet connection than a lot of people think is required. I’ve enjoyed seeing how many things “I can’t possibly do” in discussion threads regarding internet connection speeds and “human rights.” Strangely, I have no problem doing almost all those things, though my connection speeds are less a function of cost and more a function of what I can get around here. I’m not doing terribly well on this front, mostly because I do need reliable and somewhat fast internet for work - but I’m also pulling some strings, pretty hard, to start up my own little community ISP as soon as I can get some actual bandwidth out here.

Foreign Workers

Still think regular device upgrades are a good idea? Go read “Dying for an iPhone” by Chan, Selden, and Ngai. It looks at the way Foxconn in particular, and Chinese factories and tech contractors in general, treat their workers. “Slavery” is a good enough description, even though there are some token wages - and that’s before you get into the literal, actual slavery going on in certain corners of China involving the raw materials that feed into the “You can’t prove it’s actually slavery!” ends of production.

Long gone are the days when we had computers made by skilled workers in western nations - and while the tech goodies have gotten cheaper (or the profits have gotten larger…), there remains a huge amount of genuine worker abuse that goes into our cheap goodies.

Does there exist a way to actually justify this from any sort of ethical first principles? I’m fairly sure there isn’t… so it remains an open question whether using anything we would consider “consumer tech” makes us actually any better than the slave owners so reviled over the last century and a half. It’s just that the modern slaves are in other countries, and we can pretend they’re being lifted out of poverty (by being ripped free of their family and then having to pay to live in company towns that suck back just about all of their wages - it really is along the lines of the old mining towns, just with a larger supply of bodies to throw at the problem and less immediately obvious bodily harm than a mine collapse). By forcing them to build us shiny gadgets they’ll never afford, we’re helping them! Or something of the sort. I’m legitimately not sure you can build a case for any of modern tech, the way we do it, ethically.

Minimum E-Waste Impact

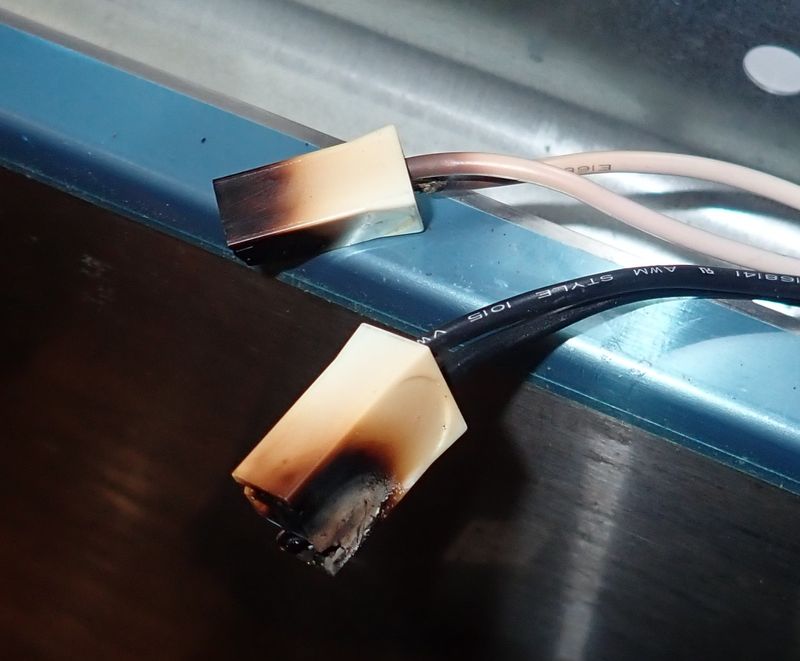

Of course, that sort of abuse is on the supply side of things - mining and refining the raw materials, building the equipment, etc. What happens when a device is discarded is often even worse. “Recycling” is often just a thick shiny coat of toxic green glop over the actual reality, which is better described as “being shipped to a third world country to someone who claims they’re a recycler, who burns the e-waste in open air pits to recover the trace metals they can sell for very little.” I don’t suggest being downwind of a modern electronics fire - yet, there are parts of the globe in which this is just what the air consists of. It’s absolutely as unhealthy as it sounds.

Remember, every new piece of consumer electronics is a piece of e-waste that will eventually happen somewhere, to some human on the planet. Will it be “properly” recycled (if you squint really hard, you might be able to imagine some future in which that’s a thing)? Will it be landfilled to leach toxic substances out over time? Will the lithium battery start yet another fire in a recycling facility? Will it get burned in a pit?

The best thing one can do here, as far as I’ve been able to come up with, is to buy as little as you reasonably can, repair it as much as possible, keep it running as long as you can, and then try and find another interested user once you’re done (either using it as an item, or making use of the parts to repair other similar items). I’m still able to use that gutless netbook Clank for a wide range of things, and, in fact, am typing this post on it. Is it a bit of a hassle to keep running smoothly (or at all)? Yeah, sometimes. But it’s still got a nice keyboard!

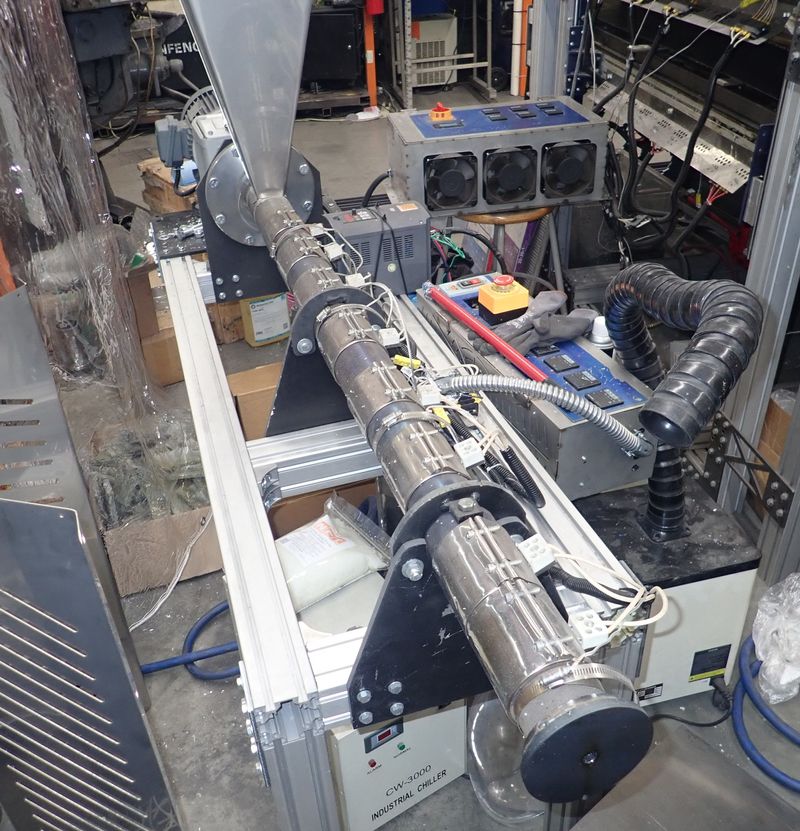

Really, though, I don’t struggle too much with my e-waste output. I’ve long since learned that one man’s e-waste is another’s food money, and I’ve made useful amounts of cash for large parts of my life intermediating the e-waste stream for profit. I used to buy broken laptops and repair them, or part them out (the value of a broken laptop’s worth of parts on eBay is well north of zero), and I’ve done some pretty entertaining stuff in the past to try and repair broken machines - quite successfully, almost all the time. iFixit is great, and they provide wonderful resources to help reduce your waste by fixing things.

The downside to running things longer is that you end up being out of the security patch window. I’ve generally avoided this in the past, but as has been pointed out to me by various people in the past few years, the alternative is to simply not keep anything of interest on your devices that are out of the patch windows. If your phone no longer receives security updates, perhaps it’s time to remove all your assorted accounts from it, and simply use it as a telephone call and texting endpoint. Security breaches matter less if there’s nothing interesting to find…

Things I Can’t Do

The usual response, if I start talking about this sort of thing, is for people to immediately come up with the things I can’t do with my hardware, and drill down on those. “But what about…” questions are common, and my answer to them is simple: “If I can’t do something with the hardware and software I’m willing to run, then, I suppose the answer is that I simply don’t do those things.” Have I given up a range of capabilities by moving from an integrated Apple ecosystem to a flip phone and a ragtag assortment of Linux hardware? Absolutely. But, by doing so, I don’t have to find myself in the common (and comfortable, as long as you don’t stop to think about it) position of “Oh, yes, company X is awful - I hate that they’re doing Y. But, I mean, I can’t live without it, so I suppose I’ll complain on social media some and then keep buying their hardware/software, keep feeding them my behavioral surplus, etc.” That’s a pretty weak response - but it’s certainly common. Just how many people who were furious about Apple’s CSAM scanning things actually stopped using Apple hardware as a result? I expect Apple hasn’t even noticed.

As I do less and less with my hardware, I find that I care less and less about the stuff I’m missing. I’ve got email, I’ve got text chat, I’ve got ssh, I’ve got a more or less working browser (though lately, for lower and lower values of “working” - NoScript is an awesome way to figure out which sites aren’t worth bothering with), and I can do writing, photo editing for blog posts, and things like that. If I can’t play AAA games, well, I guess I don’t need to play them. If I can’t do studio editing of multiple 4k video streams, I suppose I’ll survive without that as I have for most of my life so far. And so on.

Take some time to think about your tech philosophy, though. I’d love to hear what other people have developed along these lines.

How have we gotten here, though? I have some thoughts on that, too - and I’ll address those in two weeks.

Comments

Comments are handled on my Discourse forum - you'll need to create an account there to post comments. If you've found this post useful, insightful, or informative, why not support me on Ko-fi? And if you'd like to be notified of new posts (I post every two weeks), you can follow my blog via email! Of course, if you like RSS, I support that too.